Tips for keeping Azure Kubernetes Service costs down

Things to look for when tuning Azure Kubernetes Service costs

Kubernetes is awesome, but it can be a costly service to run. In many cases it pays off to review the various components and settings to see where you can save money.

How much can I save?

The amount of money you can save depends on many factors: the type of environment (staging, production), workload, application architecture, infrastructure design, etc. Before you dive into any of the tips, start with a baseline and document at least the following:

- Last month's bill

- Current billing period forecast

- Size of the Kubernetes cluster

- Current/base load of the cluster
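Once the baseline is written down, comparing later bills against it is simple arithmetic. The following is a minimal Python sketch that assumes you copy the figures from Cost Management by hand. The class, the field names and the example amounts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostBaseline:
    """Figures recorded before any tuning (field names are illustrative)."""
    last_month_bill: float    # last month's total for all cluster-related resources
    current_forecast: float   # Cost Management forecast for the current period
    node_count: int           # size of the cluster
    base_load_percent: float  # average utilization under normal load

    def cost_per_node(self) -> float:
        """Rough monthly cost per node (the bill also contains non-node costs)."""
        return self.last_month_bill / self.node_count


def savings(baseline: CostBaseline, new_monthly_cost: float) -> tuple[float, float]:
    """Return (absolute savings, savings as a percentage of last month's bill)."""
    saved = baseline.last_month_bill - new_monthly_cost
    return saved, 100.0 * saved / baseline.last_month_bill


if __name__ == "__main__":
    # Hypothetical numbers, not real AKS prices.
    before = CostBaseline(last_month_bill=4000.0, current_forecast=4200.0,
                          node_count=8, base_load_percent=35.0)
    saved, pct = savings(before, new_monthly_cost=3000.0)
    print(f"Per node: {before.cost_per_node():.2f}")
    print(f"Saved {saved:.2f} ({pct:.1f}% of the baseline)")
```

Keeping the baseline as a single record makes it easy to report savings both in absolute terms and relative to where you started.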

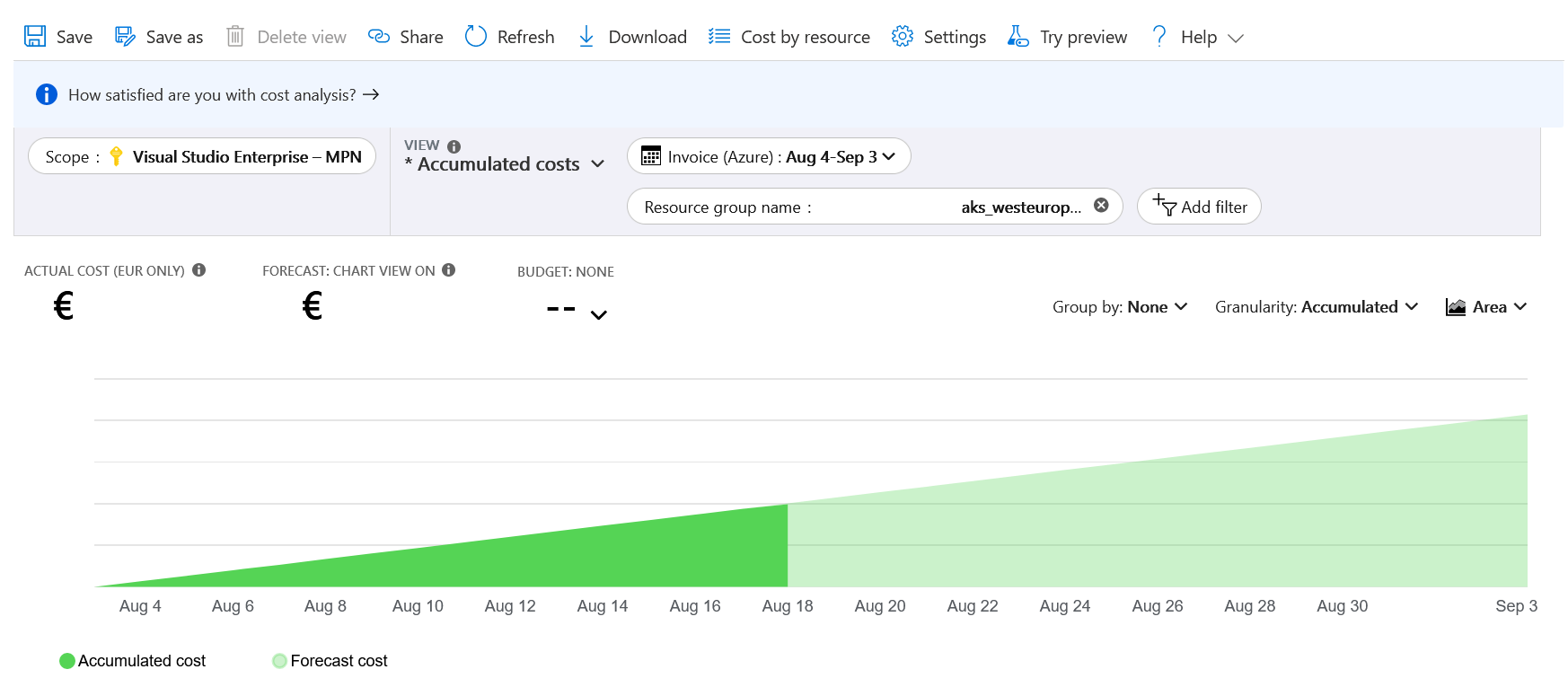

Every cloud vendor offers tools for cost analysis. In Azure, Cost Management and Billing provides accumulated reports and breakdowns by resource, which gives you insight into the total cost of your Kubernetes applications. Use filters to include all resources related to your AKS cluster (container registries, storage accounts, Log Analytics workspaces, dependent services like Key Vault, etc.).
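The "include all related resources" filter can be expressed in code. The sketch below makes several assumptions. The record layout is hypothetical and does not match the actual Cost Management export columns. The resource names are invented. The filter relies on the default AKS node resource group naming scheme (`MC_<resourceGroup>_<clusterName>_<location>`), which is where the scale sets and disks of the nodes live unless you chose a custom node resource group.

```python
from collections import defaultdict

# Hypothetical cost records; the field names are illustrative only.
RECORDS = [
    {"resource_group": "rg-shop", "resource": "aks-shop", "cost": 120.0},
    {"resource_group": "MC_rg-shop_aks-shop_westeurope", "resource": "aks-nodepool1-vmss", "cost": 900.0},
    {"resource_group": "rg-shared", "resource": "acrshop", "cost": 15.0},
    {"resource_group": "rg-shared", "resource": "log-shop", "cost": 310.0},
    {"resource_group": "rg-other", "resource": "vm-legacy", "cost": 500.0},
]


def aks_related_costs(records, cluster_rg, cluster_name, location, extra_resources):
    """Sum costs per resource for everything that belongs to one AKS cluster.

    Included: the cluster's own resource group, the default node resource group
    and explicitly listed dependencies (registry, Log Analytics, Key Vault, ...).
    """
    node_rg = f"MC_{cluster_rg}_{cluster_name}_{location}"
    totals = defaultdict(float)
    for rec in records:
        if rec["resource_group"] in (cluster_rg, node_rg) or rec["resource"] in extra_resources:
            totals[rec["resource"]] += rec["cost"]
    return dict(totals)


if __name__ == "__main__":
    costs = aks_related_costs(RECORDS, "rg-shop", "aks-shop", "westeurope",
                              extra_resources={"acrshop", "log-shop"})
    for name, cost in sorted(costs.items(), key=lambda kv: -kv[1]):
        print(f"{name:25} {cost:8.2f}")
    print(f"{'total':25} {sum(costs.values()):8.2f}")
```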

Make sure you have some cool reports ready to show off the fruits of your cost-saving labor :).

Tips

Virtual Machines are the foundation Azure Kubernetes Service runs on. Every AKS cluster node is a virtual machine in a “behind-the-scenes” Virtual Machine Scale Set, and you get billed for every single one of them, as long as it is running. So the first three tips relate to the Virtual Machine Scale Set.

Tip #1: Scale down

Maybe you don’t need the full power of the application 24/7? In that case, you can scale down, or even completely shut down, the Virtual Machine Scale Set outside of operating hours. If you don’t shut down completely, make sure you scale down the Kubernetes resources too (for example, the replica counts of your deployments). Otherwise Kubernetes will try to keep everything running on fewer nodes, and you will most likely be flooded with out-of-memory errors and pods that cannot be scheduled.

Tip within a tip: instead of manually stopping a VM Scale Set, use Azure Automation or a (scheduled) Azure Function to automate this.

More info: https://docs.microsoft.com/en-us/azure/automation/automation-solution-vm-management
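Any scheduled automation has to decide on each run how large the cluster should be and how far the deployments should shrink along with it. The Python sketch below makes that decision and nothing else. It assumes fixed weekday business hours. The hours, node counts, the `orders` deployment and the function names are hypothetical. The actual scaling call, for example `az aks nodepool scale` or the Azure SDK inside an Azure Automation runbook or a timer-triggered Azure Function, is intentionally left out.

```python
import math
from datetime import datetime, time

# Hypothetical operating hours and pool sizes; adjust to your own workload.
BUSINESS_START = time(7, 0)
BUSINESS_END = time(19, 0)
DAYTIME_NODES = 5
OFF_HOURS_NODES = 1  # keep at least one node: AKS system node pools cannot be scaled to zero


def desired_node_count(now: datetime) -> int:
    """Node count a scheduled job (runbook, timer-triggered function) should scale to."""
    is_weekday = now.weekday() < 5  # Monday == 0 ... Friday == 4
    in_hours = BUSINESS_START <= now.time() < BUSINESS_END
    return DAYTIME_NODES if is_weekday and in_hours else OFF_HOURS_NODES


def desired_replicas(normal_replicas: int, node_count: int) -> int:
    """Shrink a deployment in proportion to the nodes, keeping at least one replica."""
    return max(1, math.ceil(normal_replicas * node_count / DAYTIME_NODES))


if __name__ == "__main__":
    now = datetime.now()
    nodes = desired_node_count(now)
    print(f"{now:%a %H:%M}: scale pool to {nodes} node(s), "
          f"'orders' deployment to {desired_replicas(10, nodes)} replica(s)")
```

The replica calculation reflects the warning above: when nodes go away, the workloads must shrink as well, or the remaining nodes will be overloaded.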

Tip #2: Reserved Savings

Azure reservations (Reserved Virtual Machine Instances) are available for many VM series with one- or three-year terms, so if you are going to run a cluster for a year or longer, this could save a lot! Use the Azure Pricing Calculator to check whether reserved pricing applies to your VM sizes: https://azure.microsoft.com/en-us/pricing/calculator
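Whether a reservation pays off depends on how many hours the VMs actually run. You pay for a reservation every hour of the term, while pay-as-you-go bills only for running hours. The Python sketch below compares the two. The hourly rates are hypothetical placeholders, so look up the real ones in the pricing calculator. The model is simplified and ignores one-time versus monthly payment options and other discounts.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours


def pay_as_you_go_cost(hourly_rate: float, nodes: int, utilization: float) -> float:
    """Yearly cost when you only pay for hours the VMs actually run (utilization 0.0-1.0)."""
    return hourly_rate * nodes * HOURS_PER_YEAR * utilization


def reserved_cost(effective_hourly_rate: float, nodes: int) -> float:
    """Yearly cost of a reservation: every hour of the term is paid, running or not."""
    return effective_hourly_rate * nodes * HOURS_PER_YEAR


def break_even_utilization(payg_rate: float, reserved_rate: float) -> float:
    """Fraction of the year the VMs must run before the reservation becomes cheaper."""
    return reserved_rate / payg_rate


if __name__ == "__main__":
    payg, reserved = 0.20, 0.12  # hypothetical hourly prices per VM
    print(f"Break-even at {break_even_utilization(payg, reserved):.0%} utilization")
    for utilization in (1.0, 0.5):  # 24/7 versus scaled down outside office hours
        print(f"{utilization:.0%} running: pay-as-you-go {pay_as_you_go_cost(payg, 4, utilization):.2f}, "
              f"reserved {reserved_cost(reserved, 4):.2f}")
```

With these example numbers, running only half of the time makes pay-as-you-go cheaper than the reservation. Tip #1 and Tip #2 can therefore work against each other, so weigh them together.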

Tip #3: Mix ‘n Match

Maybe you don’t need premium disks or compute-optimized machines for every workload? Or you need GPU-optimized nodes for some Kubernetes applications or services, but not for all of them? In that case, multiple node pools allow you to mix and match different VM types. Kubernetes “taints” and “tolerations” (usually combined with node selectors or node affinity) let you schedule the right workloads on the right nodes.

More info: https://docs.microsoft.com/en-us/azure/aks/use-multiple-node-pools
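The way taints and tolerations interact is easier to follow in code. The Python sketch below is a simplified model of the Kubernetes matching rules and not the scheduler's implementation. The `sku=gpu` taint and the workload names are hypothetical examples. A toleration matches a taint when the effects agree (or the toleration leaves the effect empty), the keys agree, and either the operator is `Exists` or the values are equal.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: Optional[str] = None     # None with operator "Exists" tolerates every taint
    operator: str = "Equal"       # "Equal" (key and value must match) or "Exists" (key only)
    value: str = ""
    effect: Optional[str] = None  # None tolerates every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified version of the Kubernetes toleration matching rules."""
    if tol.effect is not None and tol.effect != taint.effect:
        return False
    if tol.key is None:
        return tol.operator == "Exists"
    if tol.key != taint.key:
        return False
    return tol.operator == "Exists" or tol.value == taint.value


def can_schedule(tolerations: list[Toleration], taints: list[Taint]) -> bool:
    """True if every hard taint on the node is tolerated by the pod.

    PreferNoSchedule is only a soft preference, so it does not block scheduling here.
    """
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


if __name__ == "__main__":
    gpu_pool = [Taint("sku", "gpu", "NoSchedule")]  # hypothetical taint on a GPU node pool
    training_job = [Toleration(key="sku", value="gpu", effect="NoSchedule")]
    web_frontend: list[Toleration] = []
    print(can_schedule(training_job, gpu_pool))  # True
    print(can_schedule(web_frontend, gpu_pool))  # False
```

A toleration only allows a pod onto the tainted nodes and does not force it there. To keep the GPU workload on the GPU pool, you would also give it a node selector or node affinity for that pool.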

Tip #4: Logging

In many cases Kubernetes apps are based on distributed architectures, with logging handled centrally, for example in Application Insights or a third-party solution. But handling large volumes of log entries requires substantial infrastructure and can become quite expensive. I have seen cases where the cost of distributed logging almost equals the cost of running the software!

So make sure log levels are configurable at runtime, so that you can silence most of the noise during normal operation. When troubleshooting, you can turn levels up for short periods to capture all the detail you need. Also, log entries should be useful and meaningful. In distributed scenarios this means you need to be able to correlate entries across services (for example with a correlation ID) to allow for real end-to-end event tracing.
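The two requirements above, a log level that can change at runtime and entries that can be correlated, can both be met with Python's standard `logging` module. This is a sketch rather than a recommendation for any particular logging product. The logger name is hypothetical. In a real service, a middleware would set the correlation ID from an incoming request header, and an admin endpoint or watched config value would call `set_log_level`.

```python
import contextvars
import io
import logging
import uuid

# Correlation ID of the request currently being handled; a web framework
# middleware would typically set this from an incoming header.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationFilter(logging.Filter):
    """Stamp every record with the current correlation ID."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def build_logger(name: str, stream) -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.addFilter(CorrelationFilter())
    handler.setFormatter(logging.Formatter("%(levelname)s [%(correlation_id)s] %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False          # avoid duplicate output through the root logger
    logger.setLevel(logging.WARNING)  # quiet by default during normal operation
    return logger


def set_log_level(logger: logging.Logger, level_name: str) -> None:
    """Change verbosity at runtime, e.g. from an admin endpoint or a watched config value."""
    logger.setLevel(level_name.upper())


if __name__ == "__main__":
    out = io.StringIO()
    log = build_logger("orders", out)    # hypothetical service name
    correlation_id.set(str(uuid.uuid4()))
    log.debug("loaded 3 cart items")     # dropped: level is WARNING
    set_log_level(log, "debug")          # e.g. while troubleshooting
    log.debug("loaded 3 cart items")     # now emitted with the correlation ID
    print(out.getvalue(), end="")
```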

Tip #5: Memory usage

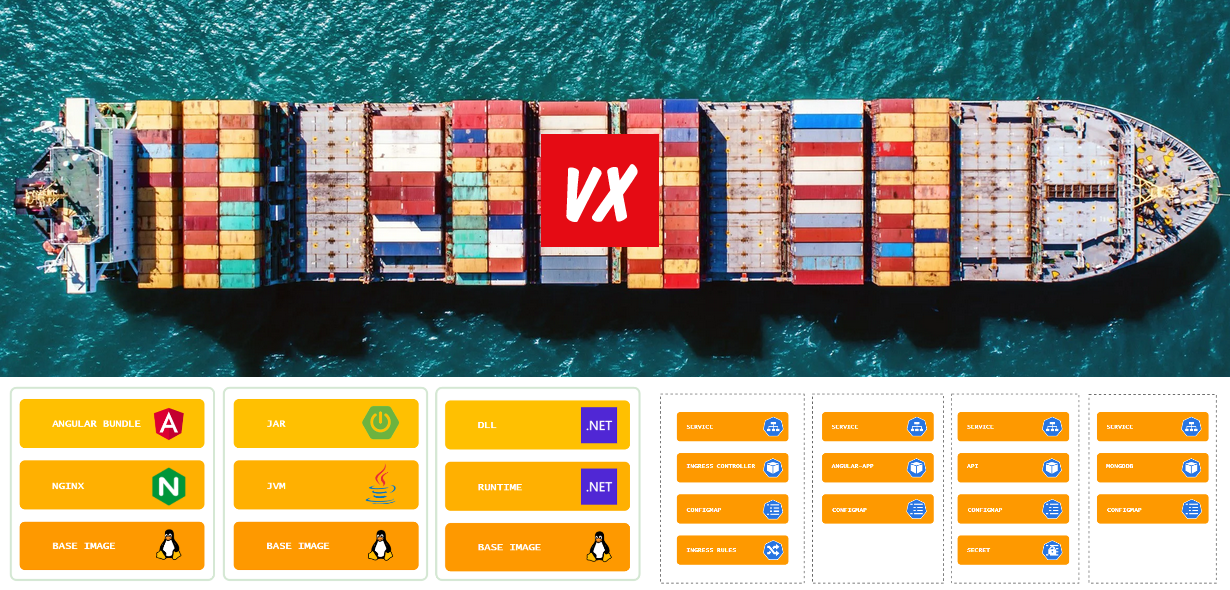

Usually Kubernetes applications are cloud (native) applications, maybe even full microservices. Yes, containers are more lightweight than VMs (they share the host OS kernel), but keeping applications memory-efficient is still very important and requires careful planning and tuning. For Kubernetes:

- Pick a small, container-optimized base image built on a container-friendly OS;

- Define resource requests and limits for your Kubernetes deployments. The scheduler places pods based on their requests, and limits cap what a container may consume. Without them, Kubernetes has no way to schedule your workloads efficiently;

- If you run .NET Core, build the image using the SDK image but run the application using the runtime image (multi-stage Docker build FTW);

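- If you run Java, configure the JVM for the container (for example, size the heap relative to the container's memory limit with -XX:MaxRAMPercentage) or try a container-optimized JVM like Eclipse OpenJ9;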

- Or run your Java workload on a container-optimized stack like Quarkus.
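The reason trimming memory pays off is that pods are placed by their requests, so smaller requests mean fewer nodes to pay for. The Python sketch below illustrates this with simple first-fit bin packing on memory requests only. It is a deliberate simplification. The real kube-scheduler also considers CPU, affinity, taints and scoring, and it only gets new nodes when the cluster autoscaler adds them. The node allocatable memory and pod sizes are hypothetical. Allocatable memory on an AKS node is lower than the VM's total because some memory is reserved for the system.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    allocatable_mib: int                       # memory available to pods on this node
    pods: list = field(default_factory=list)   # (pod name, memory request) pairs

    def requested_mib(self) -> int:
        return sum(mem for _, mem in self.pods)


def first_fit(pods: list[tuple[str, int]], node_allocatable_mib: int) -> list[Node]:
    """Place pods by memory request, adding a node whenever none has room left."""
    nodes: list[Node] = []
    for name, request in pods:
        if request > node_allocatable_mib:
            raise ValueError(f"{name} requests more memory than a node offers")
        target = next((n for n in nodes
                       if n.requested_mib() + request <= node_allocatable_mib), None)
        if target is None:
            target = Node(f"node-{len(nodes) + 1}", node_allocatable_mib)
            nodes.append(target)
        target.pods.append((name, request))
    return nodes


if __name__ == "__main__":
    for request in (1024, 512):  # before and after tuning the application's memory
        pods = [(f"api-{i}", request) for i in range(12)]
        print(f"{request} MiB per pod -> {len(first_fit(pods, 5000))} node(s)")
```

With these example numbers, halving the memory request of twelve pods reduces the number of nodes from three to two.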