Recap of day 3 of the LEAP event (day 1, day 2).

Day 3 will be (almost entirely) about containers and Kubernetes. The day starts with a high-level overview of where we are heading with all of this.

Containers/ microservices

The real world is messy, so the regular sales pitch on Kubernetes and containers does not apply. A little bit of history:

Software deployment used to be manual: build and package the code, create a VM, SSH into it and deploy the package.

Netflix started talking about Immutable Infrastructure: the idea is to build complete machine images. Images are immutable, so they are never updated. A software update means building a new image and throwing away the old one.

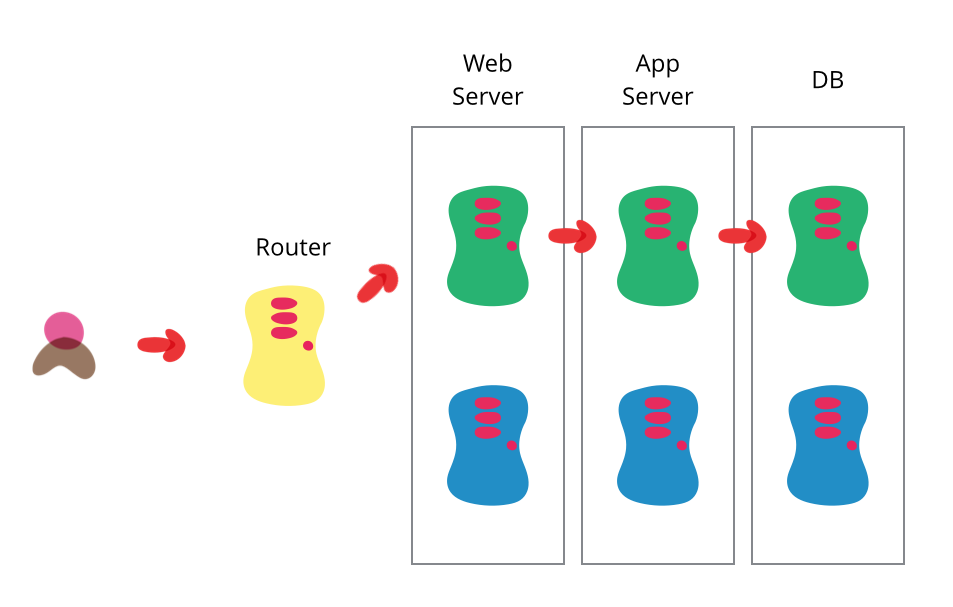

Besides packaging with machine images, Netflix started working on Blue/Green deployment patterns. Two groups of services (blue and green) exist at the same time, which does not really work with physical machines or VMs.

Source: https://martinfowler.com/bliki/BlueGreenDeployment.html
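On Kubernetes the pattern becomes cheap: both versions run side by side, and a Service's label selector decides which one receives traffic. A minimal sketch, assuming a hypothetical app `shop` whose pods carry a hypothetical `color` label; the Python only builds the manifest, which `kubectl apply -f` accepts as JSON.

```python
import copy
import json


def service_for(color: str) -> dict:
    """Build a Service that sends all traffic to the pods labelled with `color`."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "shop"},  # hypothetical app name
        "spec": {
            "selector": {"app": "shop", "color": color},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


def cut_over(service: dict, new_color: str) -> dict:
    """Return a copy of the Service that points at the other set of pods."""
    updated = copy.deepcopy(service)
    updated["spec"]["selector"]["color"] = new_color
    return updated


if __name__ == "__main__":
    live = service_for("blue")          # blue currently serves production traffic
    switched = cut_over(live, "green")  # green is deployed and tested alongside it
    print(json.dumps(switched, indent=2))
```

Rolling back is the same call with `"blue"`; the old pods are only deleted once the new version has proven itself.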

Docker came around and people started noticing. Container images ensured code runs everywhere (not only on "my machine") and deployment became predictable and reliable. But containers package software for a single machine and were hard to manage across many machines.

Orchestration became a requirement, and not only for distributing containers across machines: application health checks and restarting failed applications became important requirements too. When machines fail, Kubernetes orchestrates moving the workloads to healthy machines.
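Health checks and restarts are declared in the workload spec. A minimal sketch, assuming a hypothetical image name and a hypothetical `/healthz` endpoint in the application: the liveness probe lets the kubelet restart a hung container, and the Deployment's replica count makes Kubernetes recreate pods that were lost with a failed node.

```python
import json


def deployment_with_probes(name: str, image: str, replicas: int = 3,
                           port: int = 8080, health_path: str = "/healthz") -> dict:
    """Deployment whose containers are health-checked and restarted by Kubernetes."""
    probe = {"httpGet": {"path": health_path, "port": port}, "periodSeconds": 5}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # The ReplicaSet keeps this many pods running; pods lost with a failed
            # node are recreated on healthy nodes.
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # After 3 consecutive failed checks the kubelet restarts the container.
                        "livenessProbe": {**probe, "initialDelaySeconds": 10,
                                          "failureThreshold": 3},
                        # Until this check passes, the pod receives no Service traffic.
                        "readinessProbe": probe,
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical registry and image tag.
    print(json.dumps(deployment_with_probes("shop", "myregistry.azurecr.io/shop:1.0"), indent=2))
```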

Dependencies are hard and make our software brittle. Kubernetes forces you into patterns that get rid of dependencies. Orchestration offers Service load balancers, which enable scaling at every layer (back end and front end do not have to scale together).

What is the role of Operations in all of this? Operations supports a smaller surface, because everything is packaged in an image. There is less to operate:

- Hardware has been abstracted

- The OS has been abstracted (no specific OS support needed)

- The cluster has been abstracted (by Azure AKS for instance)

So only the application layer needs operational support. But how do we handle the legacy applications?

How about connectivity between on-premises VMs and the cloud? Azure Kubernetes clusters support Azure Virtual Networks, so connectivity should not be too hard. ExpressRoute or a regular VPN enables all kinds of scenarios.

Another option is a selector-less Service, ideal for projecting local services into your Kubernetes cluster.
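A selector-less Service is a normal Service without a `selector`, paired with a manually created Endpoints object of the same name that lists the external address. A minimal sketch, assuming a hypothetical on-premises database at `10.1.2.3:1433` reachable over VPN or ExpressRoute; it uses the classic `v1` Endpoints object (newer Kubernetes versions favour EndpointSlice objects for the same purpose).

```python
import json


def project_external_service(name: str, ip: str, port: int) -> dict:
    """Expose an on-premises endpoint inside the cluster under a Service name."""
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        # No "selector": Kubernetes will not manage endpoints for this Service itself.
        "spec": {"ports": [{"port": port, "targetPort": port}]},
    }
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        # Must match the Service name so the two objects are linked.
        "metadata": {"name": name},
        "subsets": [{"addresses": [{"ip": ip}], "ports": [{"port": port}]}],
    }
    # A v1 List lets `kubectl apply -f` create both objects from one file.
    return {"apiVersion": "v1", "kind": "List", "items": [service, endpoints]}


if __name__ == "__main__":
    # Hypothetical legacy database on the on-premises network.
    print(json.dumps(project_external_service("legacy-db", "10.1.2.3", 1433), indent=2))
```

Pods in the cluster then reach the legacy system as `legacy-db:1433`, so moving it into the cluster later only changes the Service, not its clients.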

Connecting through an Azure Load Balancer is another option for bridging the old and the new worlds.

To create cloud native applications, we need cloud native developers. It is not all about cool new tech.

Visual Studio Code extensions make working with Kubernetes (cloud and local) easy: https://github.com/Azure/vscode-kubernetes-tools

How do you control the interaction between developers/ops and Kubernetes? With Open Policy Controller: custom controllers that handle API requests to Kubernetes (part of Open Policy Agent).
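Open Policy Agent expresses its policies in its own language, Rego. As a plain-Python stand-in (not OPA itself), the sketch below shows the kind of decision such an admission controller makes: it receives an `admission.k8s.io/v1` AdmissionReview for a Pod and denies it when an image comes from outside a trusted registry. The registry prefix is hypothetical, and the HTTPS webhook server that Kubernetes would call is left out.

```python
import json

TRUSTED_REGISTRY = "myregistry.azurecr.io/"  # hypothetical trusted registry prefix


def review(admission_review: dict) -> dict:
    """Answer an AdmissionReview for a Pod: allow it only if all images are trusted."""
    request = admission_review["request"]
    pod_spec = request["object"]["spec"]
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    untrusted = [c["image"] for c in containers
                 if not c["image"].startswith(TRUSTED_REGISTRY)]
    # The response must echo the request uid so the API server can match it.
    response = {"uid": request["uid"], "allowed": not untrusted}
    if untrusted:
        response["status"] = {"message": "untrusted images: " + ", ".join(untrusted)}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}


if __name__ == "__main__":
    sample = {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "request": {
            "uid": "1234",
            "object": {"spec": {"containers": [{"name": "web", "image": "docker.io/nginx"}]}},
        },
    }
    print(json.dumps(review(sample), indent=2))
```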

Cluster Services force a standardized way of doing things (if you deploy into the cluster, you will get the standard monitoring). So instead of teaching people how to monitor, we enable this upon deployment into the cluster.

Containers as an Azure primitive

Azure Container Instances: the easy way to host containers. Small in size and oriented towards application workloads. It is a container primitive, micro-billed (by the second), with invisible infrastructure.

ACI and AKS:

- ACI will not solve your orchestration challenges

- But will work together with Kubernetes

- And ACI remains simpler to maintain

Virtual Nodes (built on Virtual Kubelet) is an add-on for AKS. You can tag certain pods for out-of-band scaling with ACI!
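"Tagging" pods for the virtual node is done with a node selector plus tolerations for the virtual node's taint. A minimal sketch; the label and toleration values below follow the AKS virtual nodes documentation as I know it and may differ between AKS versions, so check the current docs before relying on them. The image name is hypothetical.

```python
import json


def aci_deployment(name: str, image: str, replicas: int = 5) -> dict:
    """Deployment whose pods are scheduled onto the ACI virtual node."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [{"name": name, "image": image}],
                    # Select the virtual node (label values as assumed above).
                    "nodeSelector": {
                        "kubernetes.io/role": "agent",
                        "kubernetes.io/os": "linux",
                        "type": "virtual-kubelet",
                    },
                    # The virtual node is tainted, so pods must tolerate it explicitly.
                    "tolerations": [
                        {"key": "virtual-kubelet.io/provider", "operator": "Exists"},
                        {"key": "azure.com/aci", "effect": "NoSchedule"},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(aci_deployment("batch-worker", "myregistry.azurecr.io/worker:1.0"), indent=2))
```

Keeping the tolerations but dropping the `nodeSelector` allows, rather than forces, placement on the virtual node, so the scheduler can use regular nodes first and ACI as overflow.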

ACI and Logic Apps:

- Intended to be used together, especially for task based scenarios

- Integrate ACI and Logic Apps, for example for ad-hoc sentiment analysis

- Bots (for example, a PR review bot)

Samples: https://github.com/Azure-Samples/aci-logicapps-integration

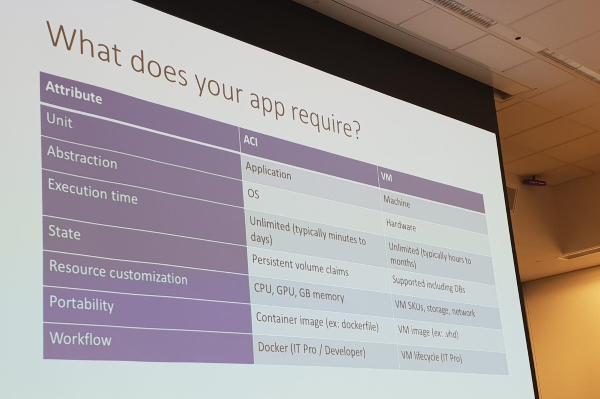

ACI and Virtual Machines:

- ACI is not intended to replace VMs

- ACI is usually cheaper than VMs

- Guest customization required (network, CPU, etc.)? Provisioned VMs are a better choice.

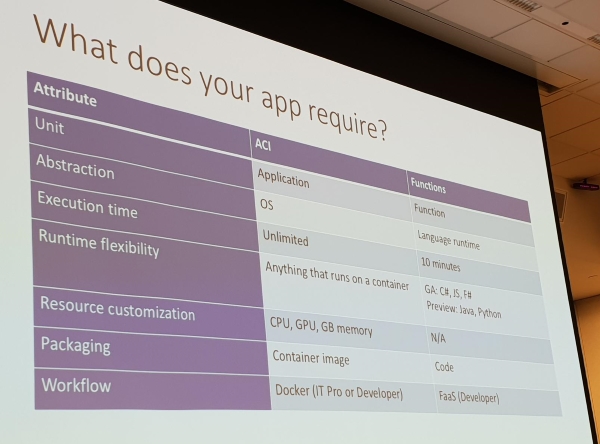

ACI or Functions:

- App security might be decisive

- Level of customization (the more customization you need, the better ACI fits)

- Or combined: ACI + Functions = Simple orchestration

Service Fabric + Mesh

Focused on microservices and the balance between business benefits (cost visibility, partner integration, faster future releases) and developer benefits (agility, flexibility):

- Any code or framework

- DevOps integration

- Integrated diagnostics and monitoring

- Highly available

- Auto scaling/ sizing

- Manage state

- Security hardened

- Intelligent (message routing, service discovery)

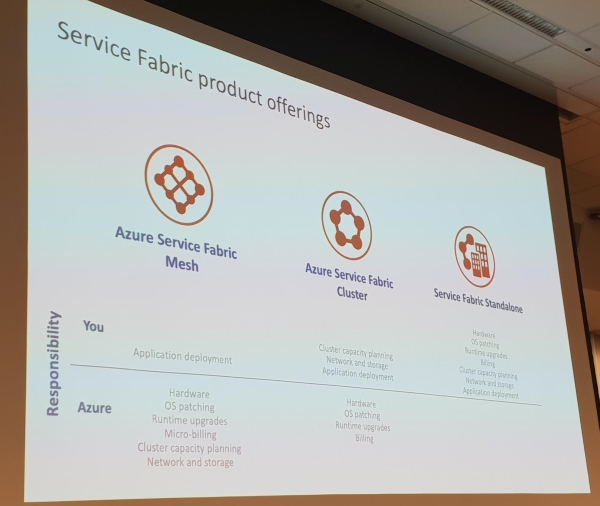

Service Fabric is a complete microservices platform which, compared to Kubernetes, controls the entire ecosystem. Service Fabric is available fully managed, as dedicated Azure clusters, and on a Bring Your Own Infrastructure basis.

Service Fabric Mesh is the Azure Fully Managed option.

Operational Best Practices in AKS

Also available from the docs.

Cluster Isolation Parameters:

- Physical Isolation: every team has its own cluster. Pro: no special Kubernetes skills or security required.

- Logical Isolation: isolation by namespace, at lower cost. Typically separated into Dev/Staging and Prod.

- Logically isolated environments share a single point of failure. Apply a good mix of logical and physical isolation.

- Kubernetes Network Policies: coming soon to AKS

- Kubernetes Resource Quotas: limit compute. Every spec should have this.

- Developers are better left coding and should not be bothered too much with Kubernetes. A cross-functional team or a dedicated Kubernetes team is the answer here.
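Logical isolation, resource quotas and network policies combine naturally per namespace. A minimal sketch with hypothetical team names and limits; the NetworkPolicy only takes effect on a cluster with network policy support (which the session listed as coming soon to AKS).

```python
import json


def team_namespace(team: str, cpu: str = "4", memory: str = "8Gi", pods: int = 20) -> dict:
    """Namespace for one team, capped by a ResourceQuota and closed by default."""
    namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": team}}
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            "hard": {
                # With compute quotas in place, pods that omit requests/limits are
                # rejected unless a LimitRange supplies defaults.
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": str(pods),
            }
        },
    }
    deny_ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": team},
        # An empty podSelector matches every pod in the namespace; with no ingress
        # rules listed, all incoming traffic to those pods is denied.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota, deny_ingress]}


if __name__ == "__main__":
    print(json.dumps(team_namespace("team-a"), indent=2))
```

The quota is also what makes "every spec should have this" enforceable: a team that forgets requests and limits gets an error at deploy time instead of starving its neighbours.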

Networking:

- Basic networking: uses kubenet; the drawback is that it is hard to connect to on-premises networks due to its IP addressing (0.0.0.0/8).

- Advanced networking: uses Azure CNI.

- Services can be public (the default scenario), but also private (an internal Service, accessible only through the VNet or ExpressRoute).

- Ingress as an abstraction to manage external access to services in the cluster. Multiple services, single public IP.

- Use Azure Application Gateway with WAF to secure services. This requires a private load balancer. More info on this here.
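The private load balancer and the ingress abstraction from the list above look like this in manifest form. A minimal sketch with hypothetical service names and host: the Service uses the AKS `azure-load-balancer-internal` annotation so its load balancer gets a VNet address instead of a public IP (the setup Application Gateway would front), and the Ingress uses the current `networking.k8s.io/v1` API, which needs an ingress controller installed in the cluster.

```python
import json


def internal_service(name: str, port: int, target_port: int) -> dict:
    """LoadBalancer Service that AKS exposes on the VNet only, without a public IP."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": {
                "service.beta.kubernetes.io/azure-load-balancer-internal": "true",
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def fanout_ingress(host: str, routes: dict) -> dict:
    """Ingress that routes URL prefixes on one host (one public IP) to several Services."""
    paths = [
        {
            "path": prefix,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for prefix, (service, port) in routes.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "web"},
        "spec": {"rules": [{"host": host, "http": {"paths": paths}}]},
    }


if __name__ == "__main__":
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            internal_service("orders", 80, 8080),
            fanout_ingress("shop.example.com",
                           {"/orders": ("orders", 80), "/": ("frontend", 80)}),
        ],
    }
    print(json.dumps(manifests, indent=2))
```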

Cluster Security:

- Key risks include elevated access, access to sensitive data and running malicious content.

- How to secure? Identity and access management (IAM) through Azure Active Directory (AAD).

- Just released: API to rotate credentials. Coming soon: API to rotate AAD credentials.

- A ClusterRoleBinding on groups (using the AAD group GUID) is recommended, of course. This saves administration effort.

- There is currently no way to invalidate the admin certificate. If an admin leaves but still has access to the kubeconfig (which holds the entire certificate), you are in trouble.

- Coming soon: PodSecurityPolicy and NodeRestriction.

- The Kured DaemonSet can be used to schedule node reboots, for example after OS updates.
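Binding a ClusterRole to an AAD group means access is managed by group membership in AAD rather than per user in the cluster. A minimal sketch, assuming an AKS cluster with AAD integration; the group object ID is hypothetical, and `view` is one of the built-in Kubernetes ClusterRoles (alongside `edit`, `admin` and `cluster-admin`).

```python
import json
import uuid


def bind_aad_group(group_object_id: str, cluster_role: str = "view") -> dict:
    """ClusterRoleBinding that grants a ClusterRole to every member of an AAD group."""
    uuid.UUID(group_object_id)  # raises ValueError on a malformed GUID
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": f"aad-{cluster_role}-{group_object_id}"},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": cluster_role,
        },
        # With AAD integration, a group subject is identified by its object ID.
        "subjects": [{
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Group",
            "name": group_object_id,
        }],
    }


if __name__ == "__main__":
    # Hypothetical AAD group object ID.
    print(json.dumps(bind_aad_group("0f8fad5b-d9cb-469f-a165-70867728950e"), indent=2))
```

When someone leaves the team, removing them from the AAD group revokes their cluster access without touching the binding (this does not help with the admin certificate problem mentioned above).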

Container Security:

- Use a trusted registry

- Update images

- Scan images and containers regularly

- Use Monitoring

- Third-party tools: e.g. https://neuvector.com/kubernetes-security-solutions/

Pod Security:

- Least privilege: https://docs.microsoft.com/en-us/azure/aks/developer-best-practices-pod-security

- Best practice: https://github.com/Azure/aad-pod-identity#demo-pod
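Least privilege at the pod level comes down to a handful of `securityContext` fields. A minimal sketch with a hypothetical image and a hypothetical non-root UID; which fields your application tolerates (for example a read-only root filesystem) depends on the app, so treat these as a starting point rather than a complete policy.

```python
import json


def least_privilege_pod(name: str, image: str) -> dict:
    """Pod whose container runs as a non-root user with no extra privileges."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Do not mount a service account token unless the app calls the Kubernetes API.
            "automountServiceAccountToken": False,
            "containers": [{
                "name": name,
                "image": image,
                "securityContext": {
                    "runAsNonRoot": True,              # refuse to start as UID 0
                    "runAsUser": 1000,                 # hypothetical non-root UID
                    "allowPrivilegeEscalation": False,  # block setuid-style escalation
                    "readOnlyRootFilesystem": True,
                    "capabilities": {"drop": ["ALL"]},  # no Linux capabilities at all
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(least_privilege_pod("web", "myregistry.azurecr.io/web:1.0"), indent=2))
```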

Tenants and Azure Kubernetes Service

Slicing and Dicing, Hard and Soft Multitenancy in Azure Kubernetes Service.

All security concepts still apply; there is no real magic going on in Kubernetes. And you don't need multi-tenancy most of the time:

- Kubernetes does not give you multi-tenancy, AKS also doesn’t. Yet.

- There are tools you can use, patterns to practise.

- But you need the normal tools: Azure Networking

- And in addition AKS RBAC.

If you are not going to physically isolate your clusters, you have to handle multi-tenancy yourself in your SaaS application.

https://azure.microsoft.com/en-us/resources/designing-distributed-systems

Soft Multitenancy: AKS + best cluster isolation practices

Pods on ACI Virtual Nodes get hypervisor isolation. Regular Kubernetes nodes are VMs whose containers share the same "Docker" security: no true kernel isolation.

AKS Engine: the units of Kubernetes on Azure. The AKS product is built with it, but you can also use it to run clusters yourself and configure the way they run (for example, using a micro hypervisor like Kata Containers).

Kubernetes Operating Tooling

Terraform: deploy Kubernetes clusters, pods and services across environments and clouds. https://www.terraform.io/

Helm: package manager for Kubernetes. It is a tool for managing Kubernetes charts. Charts are packages of pre-configured Kubernetes resources. Charts can describe complex apps and provide repeatable app installs. https://helm.sh/

Draft: generate Dockerfiles and Helm charts for your app. https://github.com/Azure/draft

Brigade: Brigade is a tool for running scriptable, automated tasks in the cloud as part of your Kubernetes cluster. https://brigade.sh/

Kashti: a simple UI to visualize Brigade. https://github.com/Azure/kashti

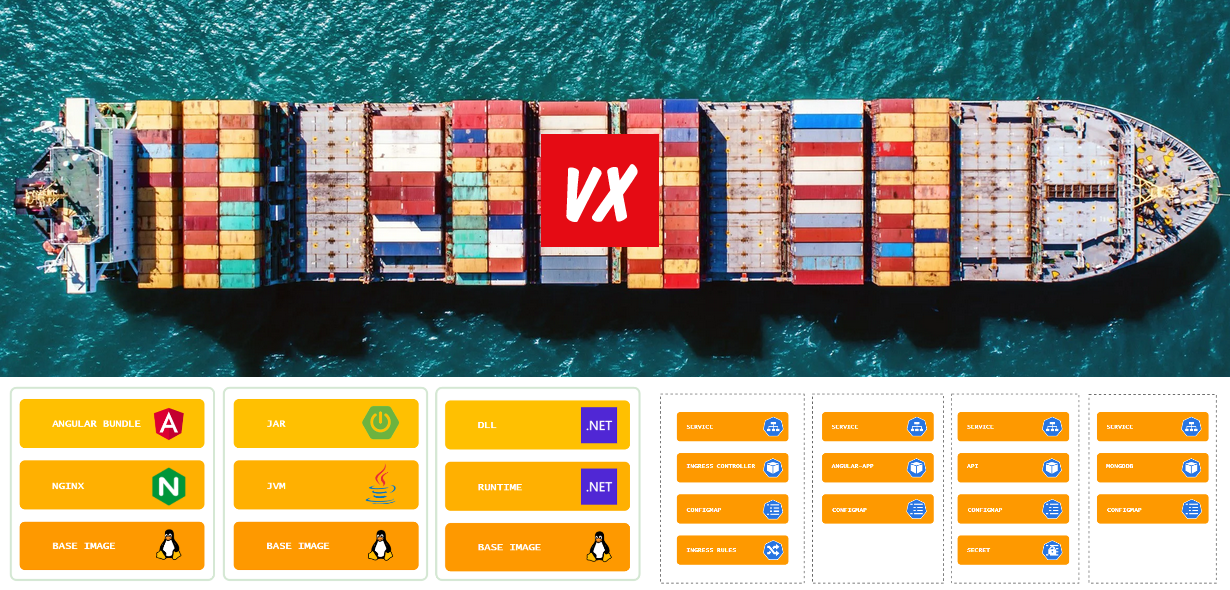

Cloud Native Application Bundle: A spec for packaging distributed apps. CNABs facilitate the bundling, installing and managing of container-native apps — and their coupled services. https://cnab.io/

Porter: a cloud installer. https://porter.sh/